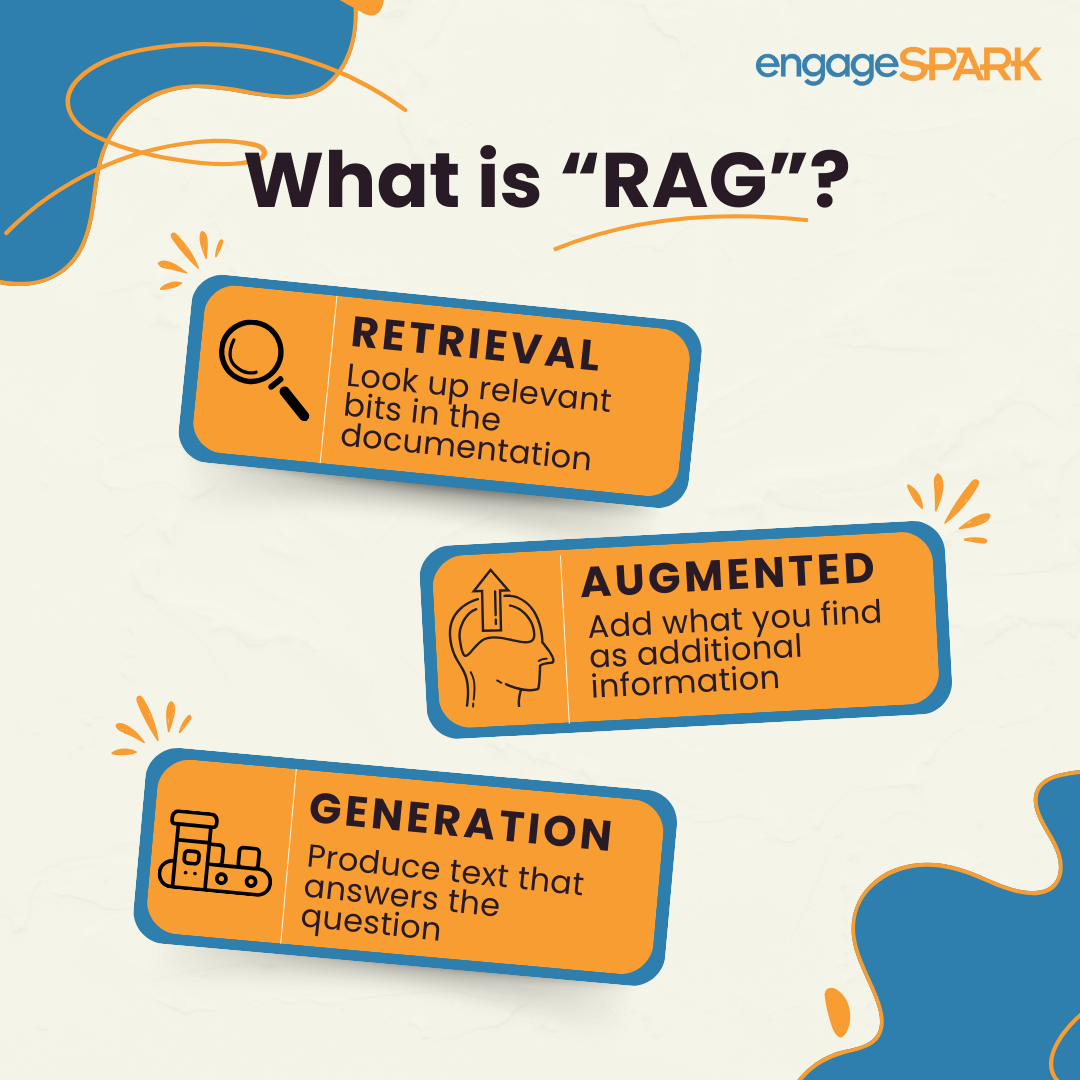

How does an AI chatbot become an “expert” chatbot? Somehow it needs to learn or use your existing documentation to answer questions. And usually it does that with “Retrieval-Augmented Generation”, RAG for short.

That’s a mouthful, right? But it’s actually simple. In this blog post, we’ll explain what this means, why you need it, and what the difficult bit is.

This blog post is part of the “Chatbots for LMICs” series. Find the other parts in the series introduction.

What is RAG?

So, Retrieval-Augmented … what?

I know, they made it sound complicated, but it’s really simple: It means you allow the chatbot to read your documentation before answering.

Think of an open-book exam. When there is some formula or fact you forgot, you can quickly flip through the book, find that particular page, and (ideally) answer the question.

That’s what an expert chatbot does with RAG: it looks in its knowledge base for a few relevant pages, and uses them to answer the question.

Its knowledge base was created based on your documentation. And if it finds the right bits (more on that later), it can be quite the expert.

Now that we understand what is meant, let’s look again at the words behind RAG:

- Retrieval: You look something up.

- Augment: You improve something.

- Generation: That’s what AI chatbots do, they generate text.

So, RAG just means: you improve the text generation with a lookup. The idea makes sense, right? No idea why they couldn’t find a simpler term.
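For the curious, here is a minimal sketch of those three words in Python. It is only an illustration under stated assumptions: the knowledge base is a made-up list of three snippets, the function names `retrieve` and `augment` are hypothetical, the lookup is naive word matching, and the last step (sending the prompt to an AI model) is left out.

```python
import re

# Hypothetical knowledge base: placeholder snippets standing in for your documentation.
KNOWLEDGE_BASE = [
    "Wash your hands with soap and clean water for at least 20 seconds.",
    "Boil water for one minute to kill most germs before drinking it.",
    "Store treated water in a clean, covered container.",
]

def words(text):
    """Lowercase a text and return the set of words it contains."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(question, snippets, top_k=2):
    """Retrieval: rank snippets by how many words they share with the question."""
    question_words = words(question)
    ranked = sorted(snippets, key=lambda s: len(question_words & words(s)), reverse=True)
    return ranked[:top_k]

def augment(question, snippets):
    """Augment: put the retrieved snippets in front of the question."""
    context = "\n".join(f"- {s}" for s in snippets)
    return f"Answer using only these notes:\n{context}\n\nQuestion: {question}"

question = "How long should I boil water before drinking?"
prompt = augment(question, retrieve(question, KNOWLEDGE_BASE))

# Generation: this prompt is what would be handed to the AI model.
print(prompt)
```

Real systems replace the word matching with something smarter, but the shape stays the same: look something up, add it to the question, then generate.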

But wait a minute: why do we need this RAG?

Why you need RAG for chatbots

ChatGPT is super knowledgeable, right? Other AIs based on similar Large Language Models (LLMs) are, too. So, why do you need to add even more knowledge with RAG?

Let’s use an example: A typical Water, Sanitation, and Hygiene (WASH) campaign.

Your organization is helping communities fight water-borne diseases. You do this by improving access to clean water, but hygiene matters, too. In short, a typical WASH project.

So, you want to help people understand the relevant diseases and how to fight them, mostly with proper hygiene. While you distribute booklets and have a nice SMS campaign that pushes out reminders, you think a chatbot might help you provide information 24/7.

You test this. You go to ChatGPT and ask for hygiene information. But the information it provides is generic, some of it is wrong, and the sources it gives you are not always approved by your organization.

Instead, you’d like the chatbot to stick to official WASH information from the CDC and EPA. And you have a few PDFs with information specific to the countries you work in. The chatbot should use those, too.

That’s when you need RAG—when what the chatbot knows off-the-shelf isn’t good enough.

With RAG you can provide custom knowledge and the chatbot uses it. Sounds great, right? So, what’s the catch?

The catch: Finding the right page

There are two problems with RAG:

First, your chatbot has to find the right pages to look at.

Let’s say you’re asking the bot about how to install a water filter on your tap.

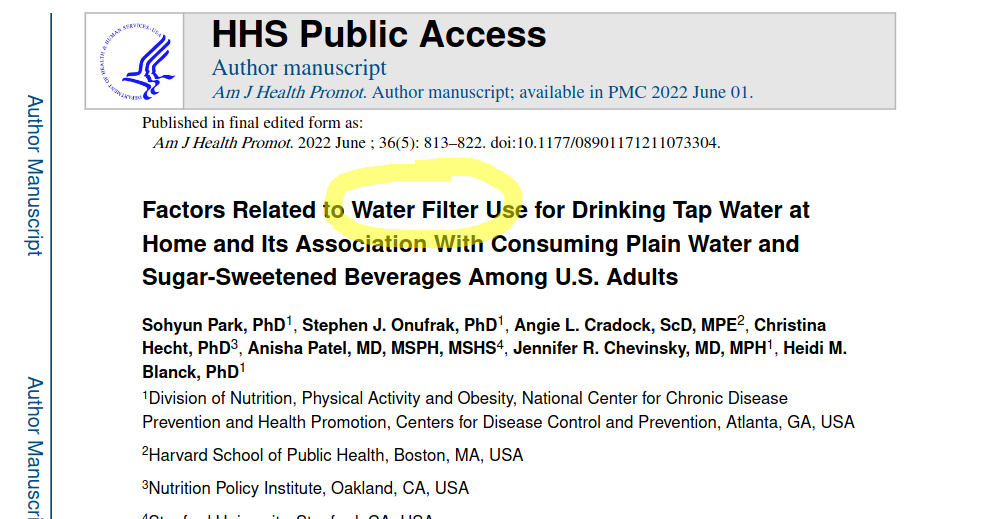

The chatbot might then search its knowledge base for water filter-related information and come up with text from a PDF titled “Factors Related to Water Filter Use for Drinking Tap Water at Home and Its Association With Consuming Plain Water and Sugar-Sweetened Beverages Among U.S. Adults”.

That’s possible: the phrase “water filter” is in the title, and appears throughout the article. If it’s part of the knowledge base, it might pop up.

Unfortunately, the article doesn’t help the bot explain how to install a water filter. Having found only that article, the chatbot will not be able to answer the question.

That’s why finding the right information is super important to make the “R” (Retrieval) in “RAG” work.
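To see how a lookup can go wrong, here is a small sketch that scores documents by counting the words they share with the question. The study title is the one quoted above. The “guide” entry and the names `keyword_score` and `documents` are made up for illustration, and real chatbot platforms use more sophisticated matching than this.

```python
import re

def words(text):
    """Lowercase a text and return the set of words it contains."""
    return set(re.findall(r"[a-z]+", text.lower()))

def keyword_score(question, document):
    """Count how many distinct words the question and the document share."""
    return len(words(question) & words(document))

documents = {
    # Title of the study mentioned in the text.
    "study": (
        "Factors Related to Water Filter Use for Drinking Tap Water at Home "
        "and Its Association With Consuming Plain Water and "
        "Sugar-Sweetened Beverages Among U.S. Adults"
    ),
    # Hypothetical how-to guide that happens to use different words.
    "guide": "Step-by-step guide: attaching a faucet-mounted purifier",
}

question = "How do I install a water filter on my tap?"
scores = {name: keyword_score(question, text) for name, text in documents.items()}
best_match = max(scores, key=scores.get)

print(scores)
print("Best match:", best_match)
```

The study wins because it repeats “water”, “filter”, and “tap”, even though the guide is the one that would actually answer the question. Matching by meaning instead of by exact words is what reduces this kind of miss.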

The good news is that you don’t need to figure this out yourself. There are tools out there that take care of this. And yes, those tools might need some fine-tuning, too. But that’s what your chatbot platform or provider (like us at engageSPARK) is there for.

Deep dive: If you’re interested in learning more about finding a relevant document in a knowledge base, look for articles or YouTube videos on “semantic search”.

To complete our RAG overview, we have to understand one last thing, and it is the second problem: it has to do with the pages themselves. Ready to jump down the rabbit hole?

What are pages?

The truth is that RAG chatbots don’t read pages of anything. Your best practice guides and other documentation are split into chunks of text and stored in the chatbot’s knowledge base (a “vector store”).
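As a rough sketch of what “splitting into chunks” can look like, the snippet below cuts a text into pieces of a fixed number of words. The sample text, the function name `split_into_chunks`, and the chunk size are assumptions for illustration. A real vector store would also convert each chunk into numbers so it can be searched by meaning, which this sketch skips.

```python
def split_into_chunks(text, words_per_chunk=6):
    """Split a text into consecutive chunks of at most `words_per_chunk` words."""
    words = text.split()
    return [
        " ".join(words[start:start + words_per_chunk])
        for start in range(0, len(words), words_per_chunk)
    ]

# Hypothetical documentation text.
text = (
    "Wash hands with soap before eating. "
    "Boil drinking water for one minute. "
    "Store treated water in a covered container."
)

chunks = split_into_chunks(text)
for number, chunk in enumerate(chunks, start=1):
    print(number, chunk)
```

Notice that the last chunk here ends up holding a single word. Cutting purely by size ignores where sentences and topics begin and end, which is exactly why chunking needs some care.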

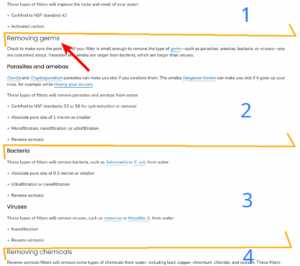

Consider the following picture. It shows a CDC page about water filters. The orange lines show the borders of possible chunks—much smaller than they usually might be, but it helps illustrate the point.

In this picture we have four chunks. Now assume we’re searching for information about germs in water.

Screenshot of the article “About Choosing Home Water Filters”, US CDC, last accessed Aug 15, 2025

This search might return chunk 2, because the word “germ” is present. Unfortunately, it would then miss out on the important information in chunk 3. When it starts listing germs, the chatbot would then be talking about how to combat parasites and amebas, and know nothing about bacteria and viruses.

So, this needs a bit of fine-tuning until the bot finds the right chunks, and the chunks contain complete enough information. This, too, is something you shouldn’t need to worry about too much, but it might help you understand why a chatbot is “ignoring” information. It might just not see it.
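One common way to soften the problem of information being cut off at a chunk border is to let neighboring chunks overlap, so text near a border shows up in both. The sketch below is an assumption about how that could look, not a description of what any particular platform does. The function name `split_with_overlap` and its parameters are hypothetical.

```python
def split_with_overlap(text, words_per_chunk=4, overlap=2):
    """Split a text into chunks where each chunk repeats the last
    `overlap` words of the previous chunk."""
    if not 0 <= overlap < words_per_chunk:
        raise ValueError("overlap must be at least 0 and smaller than words_per_chunk")
    words = text.split()
    step = words_per_chunk - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + words_per_chunk]))
        # Stop once a chunk has reached the end of the text.
        if start + words_per_chunk >= len(words):
            break
    return chunks

overlapping_chunks = split_with_overlap("one two three four five six seven eight nine ten")
for chunk in overlapping_chunks:
    print(chunk)
```

The trade-off is that overlapping chunks take up more space and can return near-duplicate results, so the overlap size is one more thing to tune.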

Conclusion

RAG chatbots look for relevant information that they can use to answer a question or query.

To provide that information, your documentation is cut into chunks and stored in a database. The size of those chunks and the way the most relevant ones are found both require a bit of fine-tuning, but there are tools for that, so fear not.

Hope that helped! Want to know more about our AI chatbots that people can use via SMS or WhatsApp? Say hi in chat!